It could be argued that computing a result is only half of the equation. The best strategy for avoiding errors in supporting systems is to invoke in-process validation. Intermediate solutions could be validated against known, proven patterns. When errors occur, the system can look for patterns in the data that are likely to hit edge cases and cause incorrect behavior. Persistence could be delayed or asynchronous, but never inline with the process. The runtime cost of validation is then the cost of a push, possibly of something as small as a simple marker that identifies the current state. A checkpoint could be derived from such a marker.
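A minimal sketch of that idea, assuming a background thread and an in-memory list standing in for a real store. The names `Marker`, `validate`, `persist_worker`, and `compute` are hypothetical and only illustrate the shape: the computing code pays for one queue push per validation, and persistence happens off to the side.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class Marker:
    step: str      # which stage of the computation produced the value
    ok: bool       # whether the value matched the known pattern
    value: object  # data a checkpoint could later be derived from


markers = queue.Queue()  # the only thing the hot path touches
persisted = []           # stand-in for a real store (assumption)


def persist_worker():
    # Drains markers off the hot path; None is the shutdown signal.
    while True:
        marker = markers.get()
        if marker is None:
            break
        persisted.append(marker)


def validate(step, value, pattern):
    # In-process validation: check the pattern, then push a small marker.
    ok = pattern(value)
    markers.put(Marker(step, ok, value))
    return ok


def compute(xs):
    total = sum(xs)
    validate("sum", total, lambda t: t >= 0)
    mean = total / len(xs)
    validate("mean", mean, lambda m: min(xs) <= m <= max(xs))
    return mean


worker = threading.Thread(target=persist_worker)
worker.start()
result = compute([2, 4, 6])
markers.put(None)  # ask the worker to stop once the queue is drained
worker.join()
```

The patterns here are deliberately trivial (a non-negative sum, a mean that falls between the minimum and maximum). In a real system they would be whatever known-good relationships the intermediate results are supposed to satisfy.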

Analyzing this data could lead to optimizing the data itself. In addition, programmers could see which sections of code produced a given result or condition and optimize that code. By combining the call chain information with the data associated with the checkpoint, it should be possible to re-run the data and recreate errors.
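Recreating an error from a checkpoint could look roughly like the sketch below. It assumes the checkpoint stores the call chain, the name of the function, and its arguments, and that functions are looked up through a small registry. `Checkpoint`, `REGISTRY`, `run_with_checkpoint`, and `replay` are hypothetical names, and `ratio` is a toy function chosen because it fails on an obvious edge case.

```python
import traceback
from dataclasses import dataclass


@dataclass
class Checkpoint:
    call_chain: list  # function names that led here, outermost first
    func: str         # name of the function that was running
    args: tuple       # the data associated with the checkpoint


REGISTRY = {}


def register(fn):
    # Makes a function replayable by name.
    REGISTRY[fn.__name__] = fn
    return fn


@register
def ratio(a, b):
    return a / b


def run_with_checkpoint(name, *args):
    # Capture the call chain and inputs, then run; on failure hand back the
    # checkpoint so the same data can be re-run later.
    chain = [frame.name for frame in traceback.extract_stack()[:-1]]
    checkpoint = Checkpoint(chain, name, args)
    try:
        return REGISTRY[name](*args), None
    except Exception:
        return None, checkpoint


def replay(checkpoint):
    # Re-run the recorded data through the recorded function.
    return REGISTRY[checkpoint.func](*checkpoint.args)


value, failed = run_with_checkpoint("ratio", 1, 0)
# `failed` now holds everything needed to recreate the error on demand:
# calling replay(failed) raises the same ZeroDivisionError.
```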

Knowing where an error is happening, and being able to watch it happen through recreation, could streamline debugging. Depending on how fast the data is actually analyzed, this information could also be used at runtime to alert on potential issues.

In football terms, what you are looking for is whether the "pass" of information from A to B was "completed". It's not enough to touch the ball (the data); you have to catch it and "make a football move". This means you have to receive it in a processable state. The validation query could be as simple as confirming that a get operation returns the expected state, or it could involve more complicated calculations.
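The simple form of that validation query might look like the sketch below, with a plain dictionary standing in for whatever sits between A and B. `send`, `pass_completed`, and the `is_processable` check are hypothetical names for illustration only.

```python
def send(store, key, payload):
    # A throws the pass.
    store[key] = payload


def pass_completed(store, key, expected, is_processable):
    # B must get back the expected state (the catch) and the state must be
    # usable as-is (the "football move").
    received = store.get(key)  # dict.get returns None if nothing arrived
    if received != expected:
        return False
    return is_processable(received)


def has_quantity(order):
    # Example processability rule (assumption): an order needs a positive qty.
    return isinstance(order, dict) and order.get("qty", 0) > 0


store = {}
send(store, "order:1", {"qty": 3})
completed = pass_completed(store, "order:1", {"qty": 3}, has_quantity)
dropped = pass_completed(store, "order:2", {"qty": 1}, has_quantity)
```

In this sketch `completed` is true and `dropped` is false, because nothing was ever sent under `order:2`. A pass that arrives intact but fails `has_quantity` would also count as incomplete.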

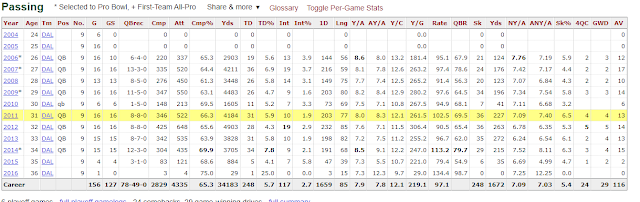

The hope is that validation improves the completion rate (that is, lowers the error rate). As impressive as a 65.3% career completion rate is (sorry, Tony), we are looking for 100.00%, or at least a path for evolving toward 100.00%.
