Warning: experimental concept. This is an exploration of how to minimize data transfer while keeping the benefits of containerization and microservices.

There are two major paradigms for cloud computing today. This article proposes a third paradigm, aimed at executing a known series of operations in order.

In the first paradigm, services front the pods where the actual calculations occur. Data is pulled from and pushed to a central data store, and it is also passed from service to service in method calls.

In the second paradigm, calculations are done in lambda functions, and data is moved back and forth to a data store between functions.

In the third paradigm, data is pulled into RAM in a pod, and then containers process the data and put their results back into the shared RAM.
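As a rough illustration of the third paradigm, the sketch below chains two stages that read from and write to one shared directory. It assumes the shared RAM is exposed to every container as a memory-backed volume (for example a Kubernetes `emptyDir` with `medium: Memory`). The names `run_stage` and `demo` and the file names are hypothetical, and a temporary directory stands in for the shared mount so the example runs anywhere.

```python
import json
import tempfile
from pathlib import Path


def run_stage(shared_dir: Path, name: str, source: str, transform) -> Path:
    """Read `source` from the shared directory, transform it, write `<name>.json`."""
    records = json.loads((shared_dir / source).read_text())
    out = shared_dir / f"{name}.json"
    out.write_text(json.dumps(transform(records)))
    return out


def demo(shared_dir: Path) -> list:
    # The data is pulled into the shared space once.
    (shared_dir / "input.json").write_text(json.dumps([1, 2, 3, 4]))
    # Each call stands in for one container taking its turn on the data.
    run_stage(shared_dir, "squared", "input.json",
              lambda xs: [x * x for x in xs])
    final = run_stage(shared_dir, "evens", "squared.json",
                      lambda xs: [x for x in xs if x % 2 == 0])
    return json.loads(final.read_text())


if __name__ == "__main__":
    # Assumption: in a real pod this would be the shared volume's mount path.
    with tempfile.TemporaryDirectory() as tmp:
        print(demo(Path(tmp)))  # [4, 16]
```

In a real pod each stage would be a separate container with the same volume mounted, so only the first read and the last write ever leave the node.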

Note the pod boundary represented by the square. The pod is located on a single node, so the data does not move as much as in the other paradigms.

For workflows that execute a known set of operations, this pattern might be a better fit.

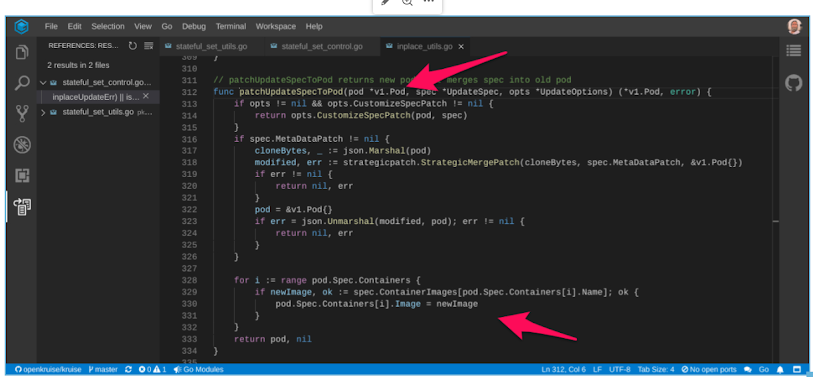

Note: this is not a Job from the Kubernetes batch API. The pods here would be based on something similar to the OpenKruise Advanced StatefulSet.

In this type of resource you can submit patches to change containers without reloading the pod. This could make it possible to have chains of containers sharing information in the pod scratch space or in local SSD with one container using the output of the previous container as input.
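A patch of this kind could look roughly like the sketch below, which builds a standard JSON Patch (RFC 6902) that swaps the image of one container in the pod template. The helper name `image_patch`, the registry, and the image tag are hypothetical. The `/spec/template/spec/containers/N/image` path is the usual pod-template layout and is assumed here to apply to the OpenKruise resource as well. Whether a given change is actually applied in place depends on the resource's update settings, so check the OpenKruise documentation.

```python
import json


def image_patch(container_index: int, new_image: str) -> list:
    """Build an RFC 6902 JSON Patch that replaces one container's image."""
    return [{
        "op": "replace",
        # Assumed path: the usual pod-template location of a container image.
        "path": f"/spec/template/spec/containers/{container_index}/image",
        "value": new_image,
    }]


if __name__ == "__main__":
    # Hypothetical image name; swaps the second container for the next stage.
    patch = image_patch(1, "registry.example.com/stage-b:v2")
    print(json.dumps(patch))
```

The printed JSON could then be handed to something like `kubectl patch <resource> <name> --type=json -p '<patch>'`, with the resource and name left as placeholders here.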

Image taken from the OpenKruise source.

NOTE: OpenKruise states that there is an issue with the kubelet reloading a container too quickly, and it provides a configurable wait period to compensate.

So, instead of transferring data from service to service as in traditional Kubernetes (paradigm 1) or in and out of lambda functions (paradigm 2), you now keep the data on a single node and change the containers that are processing it.

The data is like the car on an assembly line. Each container takes its turn transforming the data.

The limit on the number of containers in a pod would be determined by a combination of the number of compute-bound operations and the number of IO-bound operations. In an example compute-bound pod, the limit on AWS would be near 128 (the vCPU count of an x1.32xlarge), assuming you need all 128 working in parallel. If you had a mixture of compute-bound and IO-bound operations, then this number could be decreased.

Pods would be deployed via StatefulSet and thus would be addressable per pod. Each pod would execute a graph of operations in the mesh of containers.

This layout changes failover semantics:

For a failing container: data is stored at the pod level (SSD on node or scratch disk), so resumption should be possible at the latest checkpoint.

For a failing pod: data is stored at the node level (SSD), so resumption should be possible at the latest checkpoint.

For a failing node: "major" checkpoints could be persisted to a persistent volume located in the data center.

Scaling would still be by StatefulSet scaling, but each pod would be assigned a different sharded data set.

Update: It would be a mistake to think that the entire system should be factored into "pod pipelines" like this. (Once you have this hammer, not everything is a nail.) There will probably still be a need for regular services that the "pod pipeline" calls out to. (Even with an assembly line, not every part is made in the same factory.) The objective is not to do everything in the assembly line. The objective is to eliminate unnecessary data transfer.

Maybe this is called the "pod pipeline pattern".

You may be thinking: why not put all the resources into one container and just call different functions? Part of the idea behind containers was to be able to get something like lambda or microservices (having tiny containers that do one thing) without giving up data locality.

Knative could provide an alternative answer: an application of Knative where you could specify which node to deploy on. This would handle the redeployment-of-containers part of the issue, but you would be giving up the scratch space with its ability to share data between containers via RAM. Data sharing would only be via SSD.

Another approach to keep the code modular could be to host several processes in one container, making it a Docker image creation issue. In this way you could still modify the shape of the graph during processing and keep the ability to share data via the pod. Updates would still be possible, although quite a bit more complicated: a single change would affect many containers.
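Returning to the failover semantics described earlier, resuming at the latest checkpoint might be sketched as follows. This is a minimal illustration under stated assumptions, not a definitive design: `run_pipeline`, `demo`, and the checkpoint file name are hypothetical, the checkpoint would live on the node-local SSD or scratch disk in a real pod, and copying "major" checkpoints to a persistent volume is not shown.

```python
import json
import tempfile
from pathlib import Path


def run_pipeline(stages, data, checkpoint: Path):
    """Run stages in order, resuming after the last stage recorded in `checkpoint`."""
    start = 0
    if checkpoint.exists():
        saved = json.loads(checkpoint.read_text())
        start, data = saved["next_stage"], saved["data"]
    for i in range(start, len(stages)):
        data = stages[i](data)
        # Write to a temp file and rename it, so a crash cannot leave
        # a half-written checkpoint behind.
        tmp = checkpoint.with_suffix(".tmp")
        tmp.write_text(json.dumps({"next_stage": i + 1, "data": data}))
        tmp.replace(checkpoint)
    return data


def demo(workdir: Path):
    checkpoint = workdir / "checkpoint.json"  # hypothetical location
    calls = []
    failed = {"once": False}

    def double(xs):
        calls.append("double")
        return [x * 2 for x in xs]

    def flaky_add_one(xs):
        calls.append("add_one")
        if not failed["once"]:
            failed["once"] = True
            raise RuntimeError("simulated container failure")
        return [x + 1 for x in xs]

    stages = [double, flaky_add_one]
    try:
        run_pipeline(stages, [1, 2, 3], checkpoint)
    except RuntimeError:
        pass  # the "container" died after the first stage was checkpointed
    # The second run picks up at the failed stage instead of starting over.
    return run_pipeline(stages, [1, 2, 3], checkpoint), calls


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        result, calls = demo(Path(tmp))
        print(result)  # [3, 5, 7]
        print(calls)   # ['double', 'add_one', 'add_one']
```

The first stage runs only once: after the simulated failure, the rerun starts from the checkpointed output of `double` rather than from the original input.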
